By now, you probably know that generative AI (GenAI) is a double-edged sword: it introduces both opportunities and risks. On the one hand, GenAI improves productivity, especially for content creation, troubleshooting, research and analysis. On the other hand, it creates security risks that many people have yet to wrap their heads around.

In this blog post, we’re going to explore the most common risks associated with generative AI so you can educate your users accordingly.

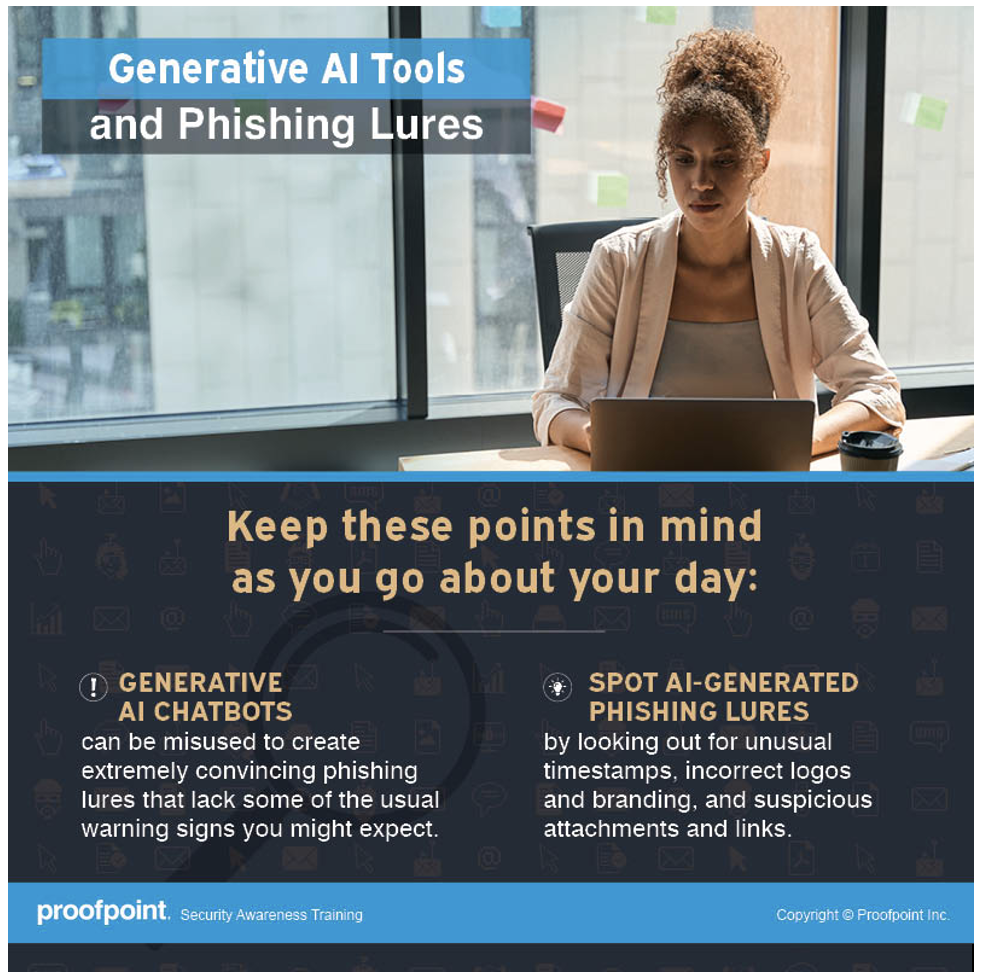

GenAI is used to create convincing lures

Cybercriminals certainly benefit from GenAI because it helps them overcome language barriers and even cultural ones. Research for Proofpoint’s 2024 State of the Phish report found that business email compromise (BEC) attacks have become more prevalent in non-English-speaking countries. The rise of GenAI is part of the reason for that trend.

Back in the day, we taught people to watch for poor grammar and misspellings in an email because those were the obvious signals of a phish. Not anymore.

With GenAI, phishing messages have become better crafted, so grammatical errors are no longer a strong indicator of a phish. Threat researchers would argue that the most sophisticated phishing messages are still written manually by bad actors. The difference is that adversaries now use GenAI to increase the volume, scale and sophistication of their attacks.

What your people should know

Don’t trust any sender, even if the email seems to come from someone you know or from an authority. Pay close attention to other signs of a phish, like a sense of urgency, an unexpected sender, mismatches between a sender’s display name and email address, and a request for payment or sensitive data.

An infographic about GenAI and phishing lures that is available with Proofpoint Security Awareness.

GenAI can be a source of data leaks

To increase productivity, some people upload their work to GenAI sites for technical or editing assistance or for data analysis. Sometimes that work involves sensitive information such as source code, customer information, a production process or a secret recipe.

What most people don’t realize is that these GenAI sites and AI chatbots may store any information that is shared with them. Developers may use users’ inputs and the chatbots’ responses to train the models and improve their performance. As a result, these chatbots could accidentally reveal sensitive data to other users, either within or outside your organization, leading to potential data breaches and putting intellectual property (IP) at risk.

What your people should know

Anything you share with a public GenAI chatbot may be shared with others. Be careful not to share sensitive information or upload sensitive data to a GenAI site. Doing so could lead to loss of IP, regulatory violations and data breaches.

GenAI hallucinations mean outputs are not always trustworthy

GenAI chatbots appear to know EVERYTHING. Whatever question you enter, they always spit out answers that seem to make sense. However, if a chatbot is trained on inaccurate or biased data, the information it generates may be factually wrong. For example, imagine an AI chatbot trained on data suggesting that the earth is flat: it could confidently present that claim as fact.

AI models are trained on data to find patterns and make predictions. If an AI chatbot is trained on incomplete or biased data, it may learn incorrect patterns and generate inaccurate, incomplete or skewed information. Output that sounds plausible but is false or misleading is known as an AI hallucination. Common examples include:

- Factually incorrect information. A statement generated by an AI chatbot appears true when it is false or only partially true. This is one of the most common forms of AI hallucination.

- Misinformation. GenAI generates false information about an entity or a real person, often mixing fabricated details with accurate ones. Some users may take those fabricated claims as truth.

- Failure to provide the full context. An AI chatbot may generate a general statement without giving users all the information they need. For instance, if someone asks whether it is safe to eat peanut butter, the chatbot may leave out details they need to make a judgment call, such as the risk of an allergic reaction to peanuts.

What your people should know

Be skeptical of all information from an AI chatbot. Do your own research using other sources, and fact-check anything a chatbot claims is true. Also remember that many AI chatbots are not designed for specialized uses such as conducting scientific research, citing laws and legal cases, or predicting the weather. You will still get an answer, but it may not be accurate.

GenAI makes it easy to create deepfakes

Deepfakes are AI-generated or AI-manipulated images, videos or audio recordings that replace or imitate a person’s face or voice. They are created with deep learning, a type of machine learning, which is where the name comes from. Deepfakes existed before GenAI tools became popular, but those tools make them far easier to create, because GenAI generates new content based on existing examples and data.

Deepfakes have become a powerful weapon for cybercriminals, especially in attacks against large enterprises. For example, attackers could use deepfakes to impersonate a company’s CEO or CFO and pressure employees into making fraudulent payments.

Because emerging technology like GenAI makes deepfakes easier and cheaper to create, it’s only a matter of time before attackers start targeting companies of all sizes. Unfortunately, no technology or solution can yet reliably detect and stop malicious deepfakes. Your best bet is to educate employees about deepfakes, establish an internal process for verifying unusual requests and create mitigation policies.

What your people should know

Always verify a request with the requester through a different channel. If you get a phone or video call from a well-known leader or professional asking you to do something immediately, verify the request with that person through a direct phone line, or in person if possible.

The bottom line: Don’t trust anyone. Use common sense. If something seems odd, report it to the security team.

An infographic about AI-generated deepfakes that is available with Proofpoint Security Awareness.

Make user education a part of your security efforts

An old Chinese proverb wisely warns: “The water that supports the boat can also engulf it.” Today, there is widespread excitement about the convenience generative AI brings and the promising future it seems to herald. However, it is crucial not to overlook the risks of this rapidly emerging technology.

As you strategize and prioritize security efforts to address GenAI risks, make sure that user education is part of the plan. As AI chatbots become more widely adopted, your employees need to learn about the benefits and the risks of GenAI so they can better protect themselves and your business.

Cybersecurity Awareness Month is approaching. Start planning now, and be sure to incorporate the risks of generative AI into your program. To learn more about how to foster a culture of vigilance that turns people from passive targets into active defenders, join our special webinar series on August 14 and 28.