As a cybersecurity administrator tasked with educating your entire organisation, you face a significant challenge. Your mission is to train employees on the dangers of phishing and the increasingly sophisticated tactics employed by cybercriminals. So, where do you begin?

For the past two decades, the standard recommendation has been a two-part educational strategy. First, provide training to explain key cybersecurity concepts. Then, follow up by assessing the effectiveness of that training through high-fidelity simulations.

But after 20 years of relying on this approach, it’s natural to ask: How effective is it? What works? And under which conditions do these interventions yield the best results?

Early evidence from laboratory and real-world studies suggested that cybersecurity training and phishing simulations were effective for training non-experts (Kumaraguru et al., 2009). However, recent large-scale, real-world evaluations showed otherwise. These evaluations, which employed large sample sizes, random assignment and control groups, found almost zero efficacy for annual training and phishing programmes (Lain et al., 2022; Ho et al., 2025). How can we explain this?

A common explanation for this discrepancy is that laboratory findings often fail to translate to real-world settings. After all, lab experiments are controlled and contrived, sidestepping the messy complexities of actual workplaces. While this explanation is likely true, it barely scratches the surface. The reality is far more nuanced—and much more intriguing.

This blog post explores findings from a recent study that evaluates the effectiveness of annual cybersecurity awareness training and phishing programmes. By examining these results through the lens of cognitive and learning sciences, we reveal why these outcomes are not only unsurprising but also predictable. Finally, we’ll provide actionable advice on how to move forward.

Evaluating the foundational components

In their article ‘Understanding the Efficacy of Phishing Training in Practice’, Ho et al. (2025) evaluate two foundational components of cybersecurity awareness and training, which they refer to as:

- Annual cybersecurity awareness training. This training is typically in the form of a series of online videos that explain the various concepts of cybersecurity. Videos cover topics like the definition of phishing, what to look out for and how to report it. They also cover the consequences of falling for a scam. Training runs once a year, and it’s usually given on the anniversary of the employee’s hire date.

- Embedded anti-phishing training exercises. These exercises occur after a learner clicks on a link in a simulated phishing campaign.

Now we’ll examine each of these components in greater detail.

1: Annual cybersecurity awareness training

According to the theoretical frameworks and the empirical findings from cognitive science, how surprised should we be that typical annual training only decreases click rates by 1.7% (Ho et al., 2025; p. 9)? We would argue that it’s not surprising at all. This is for two reasons.

First, we all know that forgetting is unavoidable. In fact, it’s such a pervasive and regular component of memory that cognitive scientists have built mathematical models that calculate the probability of remembering a piece of information at some time interval after initial learning. It’s called the power law of forgetting. And it generalises across many different topics and domains (Anderson & Schooler, 1991), including cybersecurity training (Reinheimer et al., 2020). According to this law, an individual is expected to forget 78.7% of the information after 30 days.
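To make the forgetting figure concrete, here is a minimal sketch. It assumes one simple form of the power law, R(t) = (1 + t)^(-b) with t in days. That form is an assumption for illustration, not a formula taken from the cited papers. The exponent is back-solved so that the curve reproduces the 78.7% figure at 30 days, and all function names are illustrative.

```python
import math

def retention(days: float, decay: float) -> float:
    """Fraction retained after `days`, assuming R(t) = (1 + t) ** -decay."""
    return (1.0 + days) ** -decay

def fit_decay(days: float, forgotten: float) -> float:
    """Back-solve the decay exponent from one (days, fraction forgotten) point."""
    return -math.log(1.0 - forgotten) / math.log(1.0 + days)

# Calibrate the assumed curve to the figure quoted in the text.
decay = fit_decay(days=30, forgotten=0.787)

for t in (1, 7, 30, 90, 365):
    print(f"day {t:>3}: {1 - retention(t, decay):.1%} forgotten")
```

Under this assumed curve, forgetting is steepest in the first days and then flattens, which is why a single session followed by a year-long gap leaves little of the original material behind.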

Second, we also know that learning by watching a video is going to result in shallow learning if other learning activities, such as problem solving or applying the knowledge, don’t accompany it. In many experiments, conducted both in the laboratory and in the classroom, the baseline control group either watches a video or reads a textbook passage. These groups often perform far below those receiving other forms of instruction.

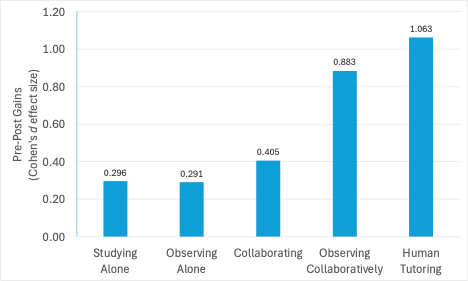

For example, consider the following laboratory experiment (Chi, Roy & Hausmann, 2008). Students were asked to learn how to solve introductory physics problems in one of five conditions:

- One-to-one human tutoring

- Watching a video of the tutorial interaction together

- Problem solving together (with no video; text only)

- Watching the tutorial video alone

- Studying alone (without video; text only)

On the more challenging aspects of the physics problems, there were no significant differences between students who studied alone and those who watched instructional videos in isolation. Both groups demonstrated significantly lower learning gains than the other three conditions, which included collaboration with either a peer or a human tutor (see Figure 1).

This aligns with research in cybersecurity training, where annual video-based sessions, delivered without follow-up problem-solving or interactive components, often fail to produce meaningful learning outcomes.

The data highlights a crucial takeaway: relationships, mentorship and opportunities to ask questions are vital for effective learning. These elements foster deeper understanding and skill development, making them essential not just for corporate functions but for teaching critical life skills as well.

Figure 1. Human tutoring, observing collaboratively and collaborating produce significantly larger learning gains than observing alone or studying alone.

2: Embedded anti-phishing training exercises

If a learner clicks on a link in a simulated phishing campaign, and so fails the simulated phish, their browser takes them to a page that we refer to as a teachable moment. In their study, the authors experimentally manipulated the design of this teachable moment. In addition to a control group, they employed four different designs, all taken from Proofpoint’s ZenGuide™ simulation platform.

Here are the possibilities for what happened next after a learner failed a simulated phish:

- Control message. The user was taken to a 404-error page; no training was provided.

- Generic static message. The user received a ‘generic’ message, which means it only provided general advice on the five features to look out for when receiving an unknown message. ‘Static’ means the user was only required to read it.

- Contextual static message. Although the message was static, the original email from the campaign was embedded in the teachable moment. It was also marked up with indicators—NIST refers to these as ‘cues’—to show the user what they should look for. Proofpoint refers to these cues as Phish Hooks.

- Generic interactive exercise. Instead of just reading a message, the learner had to answer a series of questions while they studied the teachable moment. In addition, they viewed a generic sample email message, which wasn’t marked up in any meaningful way.

- Contextual interactive exercise. Just like the previous teachable moment, the user had to answer a series of questions. However, this time the sample email was replaced with the actual email that was sent to their inbox. In addition, it was marked up with Phish Hooks, which showed them indicators they should be on the lookout for.
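One way to read the list above is as a two-by-two design (generic or contextual content, static or interactive delivery) plus a control arm. The sketch below encodes that reading. The class and field names are hypothetical and are not taken from the study or from any Proofpoint API.

```python
from dataclasses import dataclass
from itertools import product

@dataclass(frozen=True)
class TeachableMoment:
    contextual: bool   # True: the learner's actual email, marked up with cues
    interactive: bool  # True: the learner answers questions; False: read-only

    @property
    def label(self) -> str:
        content = "Contextual" if self.contextual else "Generic"
        mode = "interactive exercise" if self.interactive else "static message"
        return f"{content} {mode}"

# The control arm (a 404 page, no training) sits outside the two-by-two grid.
CONDITIONS = ["Control message"] + [
    TeachableMoment(contextual=c, interactive=i).label
    for i, c in product((False, True), repeat=2)
]

for name in CONDITIONS:
    print(name)
```

Laying the conditions out this way makes it easier to see which comparison isolates which factor: static versus interactive at a fixed content type, or generic versus contextual at a fixed delivery mode.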

The authors were careful to note that only learners who failed a phishing simulation got the above instructional intervention. Those who passed didn’t receive any additional instruction.

What did they find? Overall, there was a 9.5% reduction in phishing failures compared to the control group (p. 9). However, the reduction rose to 19% for the most effective teachable moment, the contextual interactive exercise with Phish Hooks. This larger reduction only occurred among learners who had completed their cybersecurity training (p. 12). We touch on this point in the next section because it underscores the importance of both annual training and simulation.
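When reading figures like these, it helps to know whether a reduction is absolute (percentage points) or relative (a share of the control group's failure rate). The sketch below shows both calculations. The counts are hypothetical, chosen only for illustration, and are not data from the study.

```python
def failure_rate(failures: int, users: int) -> float:
    """Share of users who failed the simulated phish."""
    return failures / users

def reductions(control_rate: float, treated_rate: float) -> tuple[float, float]:
    """Return (absolute, relative) reduction versus the control group."""
    absolute = control_rate - treated_rate
    relative = absolute / control_rate
    return absolute, relative

# Hypothetical counts, not taken from the study.
control = failure_rate(failures=200, users=1000)
treated = failure_rate(failures=181, users=1000)

absolute, relative = reductions(control, treated)
print(f"absolute: {absolute * 100:.1f} percentage points")
print(f"relative: {relative:.1%}")
```

With these made-up counts, the same change reads as 1.9 percentage points in absolute terms and 9.5% in relative terms, so the framing matters when comparing programmes.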

Contextual interactive learning is more effective

Viewed from a cognitive science perspective, are these findings surprising? Again, the answer is no. Intuitively, we all understand that learning by doing is important. Learning how to ride a bike by watching a YouTube video isn’t the same as finding an empty car park, hopping on a bike and trying (and falling!) until you figure it out.

This intuition is supported by data. In a study conducted within an online course hosted by Carnegie Mellon University, researchers found that students who actively engaged in interactive learning experiences, such as tutored problem solving, received a better course grade than those who just watched videos or read the course material. Moreover, they spent 10–20% less time on the course (Carvalho, McLaughlin & Koedinger, 2017; Koedinger et al., 2015)! This finding from an online course is consistent with what we see in real-world cybersecurity training. As you make learning experiences more interactive, you can expect better outcomes (Chi & Wylie, 2014).

Moving forward: When should contextual interactive learning be used?

With contextual interactive learning moments, timing is critical. While it might seem logical to deploy them immediately after an assessment, there are two significant issues with this approach:

- Metric integrity. Immediate feedback risks signalling to employees that a test is in progress. The notification can lead to widespread awareness within the organisation. This distorts results as employees will alert each other about the ongoing simulation. Such interference undermines the validity of the assessment, making it impossible to accurately measure who might have fallen victim in a real scenario.

- Emotional interference. Immediate feedback often triggers what’s known as amygdala hijack—a stress response that impairs learning. When employees focus on the embarrassment or anxiety of being ‘caught’, their ability to absorb and retain the intended lesson significantly diminishes. The emotional reaction to failure overshadows the teachable moment.

We recommend deploying the interactive learning moment after a short delay, but still within 24 hours of a failed assessment. This delay ensures that:

- Employees have time to process the event without the heightened emotional stress.

- The teachable moment is preserved as a genuine opportunity to learn and isn’t overshadowed by an immediate emotional response.

- The integrity of the assessment metrics remains intact, which allows the organisation to evaluate its vulnerabilities more accurately.

This approach strikes a balance between timely education and effective assessment.
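The timing rule can be sketched as a small scheduling helper. This is a minimal illustration under stated assumptions: the four-hour cooling-off period and the `campaign_ends_at` parameter are hypothetical, and only the 24-hour upper bound comes from the recommendation above.

```python
from datetime import datetime, timedelta

COOLING_OFF = timedelta(hours=4)   # assumed minimum delay, not from the study
MAX_DELAY = timedelta(hours=24)    # upper bound recommended in the text

def teachable_moment_time(failed_at: datetime, campaign_ends_at: datetime) -> datetime:
    """Pick a delivery time after the cooling-off period and, where possible,
    after the campaign closes, but never later than 24 hours after the failure."""
    earliest = failed_at + COOLING_OFF
    latest = failed_at + MAX_DELAY
    return min(max(earliest, campaign_ends_at), latest)

failed_at = datetime(2025, 1, 6, 9, 0)
print(teachable_moment_time(failed_at, campaign_ends_at=datetime(2025, 1, 6, 17, 0)))
```

Waiting for the campaign to close protects the assessment metrics, while the 24-hour cap keeps the lesson close enough to the event to stay relevant. If a campaign runs longer than a day, the cap wins in this sketch, which is a design choice you may want to revisit.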

References

Anderson, JR & Schooler, LJ (1991). Reflections of the environment in memory. Psychological science, 2(6), 396–408.

Carvalho, PF, McLaughlin, EA & Koedinger, K (2017). Is there an explicit learning bias? Students beliefs, behaviors and learning outcomes. In CogSci.

Chi, MTH, Roy, M & Hausmann, RGM (2008). Observing tutorial dialogues collaboratively: Insights about human tutoring effectiveness from vicarious learning. Cognitive science, 32(2), 301–341.

Chi, MTH & Wylie, R (2014). The ICAP framework: Linking cognitive engagement to active learning outcomes. Educational psychologist, 49(4), 219–243.

Ho, G et al. (2025). Understanding the Efficacy of Phishing Training in Practice. In 2025 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA (pp. 76–76). doi: 10.1109/SP61157.2025.00076.

Koedinger, KR, Kim, J, Jia, JZ, McLaughlin, EA & Bier, NL (2015, March). Learning is not a spectator sport: Doing is better than watching for learning from a MOOC. In Proceedings of the second (2015) ACM conference on learning@ scale (pp. 111–120).

Kumaraguru, P, Cranshaw, J, Acquisti, A, Cranor, L, Hong, J, Blair, MA & Pham, T (2009, July). School of phish: a real-world evaluation of anti-phishing training. In Proceedings of the 5th Symposium on Usable Privacy and Security (pp. 1–12).

Reinheimer, B, Aldag, L, Mayer, P, Mossano, M, Duezguen, R, Lofthouse, B ... & Volkamer, M (2020). An investigation of phishing awareness and education over time: When and how to best remind users. In Sixteenth Symposium on Usable Privacy and Security (SOUPS 2020) (pp. 259–284).