Welcome to the second installment of our two-part blog series where we answer the most frequently asked questions about the 2024 State of the Phish Report. In our previous article, we answered questions related to the threat landscape findings. Here, we answer questions related to user behaviors and attitudes, as well as how to grow your security awareness program.

One of the most interesting findings in the 2024 State of the Phish report is that 71% of users admitted to taking a risky action, and 96% of those users knew that the action was risky. This suggests that people are not acting out of ignorance. Despite knowing that their actions could compromise themselves or their organization, people chose to proceed anyway.

This information is crucial for the growth of any security awareness program. It enables organizations to tailor their efforts. By observing and analyzing how users interact with security policies, organizations can identify knowledge gaps and areas of resistance. When you engage users in this manner, you not only educate them but also transform them into active participants in protecting your organization.

96% of users who took a risky action knew that it was risky. (Source: 2024 State of the Phish from Proofpoint.)

Our findings inspired hundreds of questions from audiences across the world. What follows are some of the questions that repeatedly came up.

Frequently asked questions

What are some ways to get users to care about security and get engaged?

Two-way communication is key. Take a moment to explain to your employees why you’re running a behavior change program, what the expectations are and what projected outcomes you foresee. Make it personal. Let people know that cybersecurity isn’t just a work skill but a portable skill they can take home to help themselves and their families be safer in life.

Keep your employees up to speed on what’s happening in the current threat landscape. For example:

- What types of threats does your business see?

- Which departments are under attack?

- How does the security team handle these incidents?

- What can people do to defend against emerging threats that target them?

Research for the 2024 State of the Phish report found that 87% of end users are more motivated to prioritize security when they see increased engagement from leadership or the security team.

In short: You need to open up the lines of communication, listen to your employees and incorporate their feedback, and establish a security champion network to help facilitate communication more effectively.

Any ideas on why the click rate for phishing simulations went up for many industries this year?

There may be a few possible reasons. For starters, there has been an increase in the number of phishing tests sent. Our customers sent out a total of 183 million simulated phishing tests over a 12-month period, up from 135 million in the previous 12-month period. This 36% increase suggests that our customers may have either tested their users more frequently or tested more users in general. Also, some users might be new to these tests, resulting in a higher click rate.
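The 36% figure follows directly from the two test volumes quoted above. As a quick check, here is a minimal Python sketch of the arithmetic; the function name `percent_increase` is illustrative only and is not taken from the report.

```python
def percent_increase(previous: float, current: float) -> float:
    """Relative change from `previous` to `current`, as a percentage."""
    return (current - previous) / previous * 100


# Test volumes quoted in the answer above.
tests_previous = 135_000_000
tests_current = 183_000_000

increase = percent_increase(tests_previous, tests_current)
print(f"{increase:.1f}%")  # 35.6%, which rounds to the 36% cited
```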

Regardless, if you run phishing campaigns throughout the year, you should expect click rates to go up and down, because you want to challenge your employees with new attack tactics that they have not seen before. If you keep phishing your users with the same test, they will simply conclude, “Oh, this is the face of a phish,” and learn to recognize that one template rather than real threats.

At Proofpoint, we use machine learning-driven leveled phishing to provide a more reliable way to accurately assess user vulnerability. This unique feature allows security teams to examine the predictability of a phishing template and obtain more consistent outcomes while improving users’ resilience against human-activated threats.

People need to understand how attackers exploit human vulnerability. Phishing tests should reflect reality and be informed by real-world threats. They are designed to help people spot and report a phish in real life and to help security teams accurately identify vulnerable users who need additional help. However, it is crucial to keep in mind that the click rate of phishing tests alone does not and should not represent the impact or success of your program.

We have found users spreading the word that phishing simulations are underway, which reduces the effectiveness of these tests. What is the best way to address this issue?

Here again, the best approach is to communicate with your employees. Let them know why it is important that they refrain from alerting others to phishing tests, even if they think they are helping them. What you do need is for them to help you maintain the integrity of the tests so that people can learn to identify an actual phish. Together, you can help improve your organization’s security posture.

One thing worth mentioning is that if your organization operates under a negative consequence model, such as job termination or bonus reduction for failed tests, it could be more difficult to stop these tip-offs from happening. People want to save their friends, even if they can’t save themselves.

How often do you recommend running phishing simulations?

We recommend running phishing simulations every four to six weeks. However, you should find the right cadence for your organization by gauging internal buy-in and other variables. The point is that you don’t have to phish your entire user base every time. If you stay on a four- to six-week cadence, you can still reach everybody a few times throughout the year.
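To see how a partial-audience cadence still reaches everyone a few times a year, here is a rough Python sketch of the arithmetic. The 52-week year, the one-third targeting fraction, and both function names are assumptions made for illustration; they are not recommendations from the report.

```python
def campaigns_per_year(cadence_weeks: int, weeks_in_year: int = 52) -> int:
    """Number of whole campaigns that fit in a year at the given cadence."""
    return weeks_in_year // cadence_weeks


def average_tests_per_user(cadence_weeks: int, fraction_targeted: float) -> float:
    """Average simulations each user receives per year when every campaign
    targets only `fraction_targeted` of the user base (assumed rotation)."""
    return campaigns_per_year(cadence_weeks) * fraction_targeted


# Hypothetical scenario: each campaign targets one third of all users.
for cadence in (4, 6):
    campaigns = campaigns_per_year(cadence)
    per_user = average_tests_per_user(cadence, 1 / 3)
    print(f"every {cadence} weeks: {campaigns} campaigns, ~{per_user:.1f} tests per user")
```

Under these assumptions, a four-week cadence gives 13 campaigns and a six-week cadence gives 8, so each user would see roughly three to four simulations a year without the whole organization being phished every time.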

How can leadership be convinced that a security awareness program needs to be made available for everyone, including contractors?

Every employee can impact a company’s security posture, positively or negatively. Contractors are often brought in for specialized purposes, and they end up having access to critical data or systems. In many cases, they are not given a corporate email account and use their own personal devices, meaning the data they handle is not protected by the same level of security controls as the rest of the company.

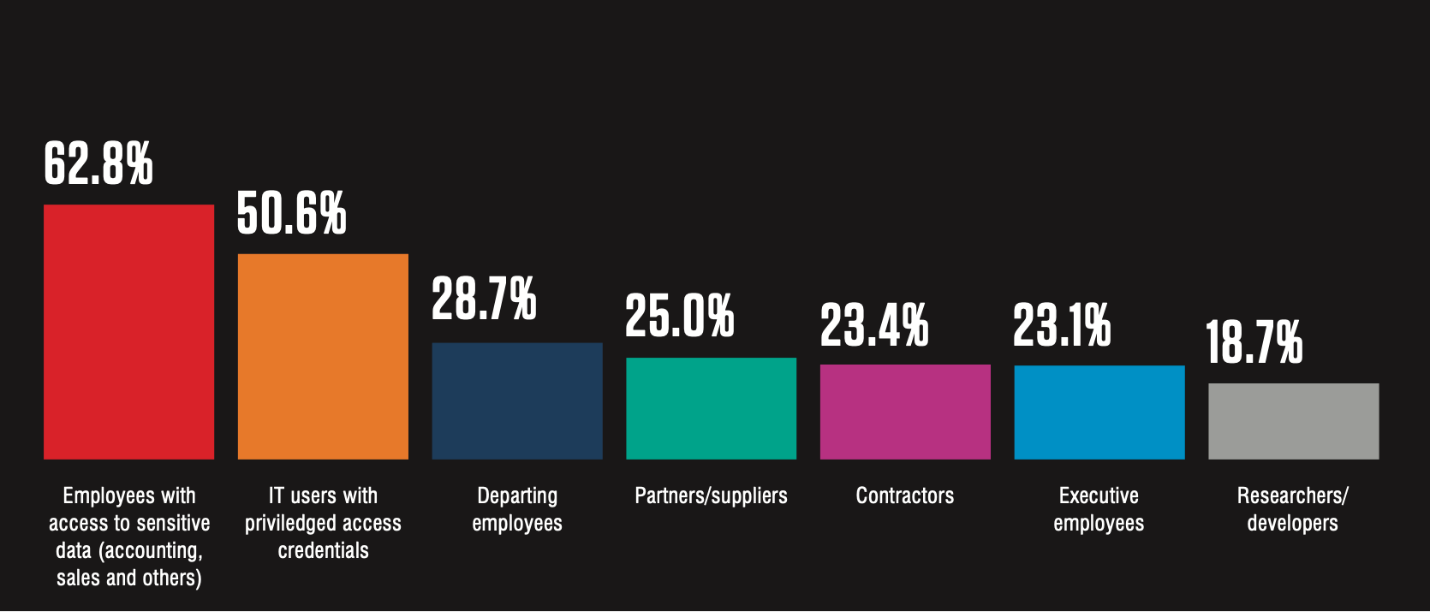

In the 2024 Data Loss Landscape report from Proofpoint, 23.4% of surveyed respondents said that they think contractors pose the greatest risk for data loss incidents. If contractors lack foundational security knowledge—security hygiene, data management and email management principles—they could pose a significant risk to an organization.

23.4% of surveyed respondents said contractors pose the greatest risk for potential data loss incidents for their organizations. (Source: 2024 Data Loss Landscape report from Proofpoint.)

What advice do you have for training executives and other highly targeted users?

Executives are busy. They often believe that they know cybersecurity well and don’t need the same training as average users. Even if that is true, you still need to choose the right topics and the right information when you try to engage them.

For example, executives travel a lot. When they travel, where do they have conversations? Perhaps at hotels, restaurants, meeting rooms or coffee shops. Who else is in those places? You want to remind executives how easy it is for someone to target them and their families. Ask them to be careful about what they share with their friends and family online. Tailor communications and training to them.

For highly targeted users, show them how they are being targeted. For instance, show people in the human resources department how they are targeted by payroll redirect scams, and show people in finance how many threats are seen that involve fake invoices.

Make the connection between the threat landscape and the individual’s role. By making information about potential threats relevant to your audience, you will be more likely to get their attention.

Why is punishment for risky behavior not recommended?

Punishment does not drive sustainable behavior change. It might halt unwanted behavior in the short term, but it creates a bigger risk for the organization in the long run.

When organizations punish employees for failing phishing tests by firing them, holding back their bonuses or cutting their paychecks, the resulting fear, uncertainty and doubt (FUD) creates a low-trust atmosphere. People don’t believe they can go to the security team if they have questions. Instead, they try to hide their mistakes or handle them on their own to avoid any trouble.

As Florian Herold, an assistant professor of managerial economics and decision sciences at the Kellogg School of Management, said, “It’s much easier to change an established behavior by offering rewards, rather than threatening with punishments.”

As a security pro, your goal is to help end users build sustainable security habits that can protect them and the business. You want to help people reflect on how they feel about the impact of their behaviors and choices, so they can take responsibility for their actions.

A punitive approach to security awareness works against that goal. It only makes people fear security and feel bad about themselves. They lose an opportunity to learn a life skill that would benefit them. And you lose an opportunity to build trust with your employees and build a strong line of defense.

How do you reward employees when you have a limited budget?

You can reward people without monetary incentives. Here are a few examples that we have learned from security awareness practitioners:

- Emails to managers and teams citing strong team security performance

- Badges awarded or other recognition given during all-hands meetings to people who have helped secure the organization

- Lunch with executives

- Gamification—using a leaderboard game to get people engaged

- A thank-you note from the chief information security officer (CISO)

How do you suggest training users for phishing awareness given that generative AI has made it more difficult to detect?

Generative AI has benefited threat actors by helping them overcome language and cultural barriers in phishing and business email compromise (BEC) attacks. While AI can correct grammatical errors and misspellings in the email body, several hooks will likely still be present in an AI-generated phish. They include:

- A sense of urgency

- Requests for sensitive or personal information

- Emotional appeal, such as fear, anger, excitement and even sympathy (we see that a lot in charity scams)

- A mismatch between the sender display name and the sender email address or URL destination

- Lookalike domains

Attackers can use generative AI to craft more convincing lures with no grammatical errors or typos, but the “red flags” listed above still hold true. And end users empowered with the right knowledge and tools will be able to pick them up.
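Two of the red flags above, a display name that does not match the sender address and a lookalike domain, can be illustrated in code. The following is a minimal Python sketch using only the standard library. It is not how any Proofpoint product works; the `TRUSTED_DOMAINS` allowlist, the 0.8 similarity threshold and all function names are hypothetical choices made for this example.

```python
from difflib import SequenceMatcher
from email.utils import parseaddr

# Hypothetical allowlist of domains the organization actually uses.
TRUSTED_DOMAINS = {"example.com"}


def sender_domain(from_header: str) -> str:
    """Extract the lowercased domain from a From header value."""
    _, address = parseaddr(from_header)
    return address.rpartition("@")[2].lower()


def is_lookalike(domain: str, trusted=TRUSTED_DOMAINS, threshold: float = 0.8) -> bool:
    """True if `domain` is not trusted but closely resembles a trusted domain.
    The threshold is an assumed value, not a tuned one."""
    if domain in trusted:
        return False
    return any(SequenceMatcher(None, domain, t).ratio() >= threshold for t in trusted)


def display_name_mismatch(from_header: str, trusted=TRUSTED_DOMAINS) -> bool:
    """True if the display name mentions a trusted brand while the address
    comes from a different domain."""
    name, _ = parseaddr(from_header)
    domain = sender_domain(from_header)
    name = name.lower()
    return any(t.split(".")[0] in name and domain != t for t in trusted)


suspicious = "Example Support <help@examp1e.com>"
print(is_lookalike(sender_domain(suspicious)))  # True
print(display_name_mismatch(suspicious))        # True
```

A simple string-similarity check like this will miss many real lookalikes and flag some legitimate domains, so treat it as a teaching aid for what users should look at rather than a detection control.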

Learn more

Want to learn more about why users engage in risky behaviors and how to help them make security a priority? Download our 2024 State of the Phish report.

Proofpoint is focused on helping organizations drive behavior change and building a positive security culture. Check out our Security Awareness solution brief to learn more.